DO WE EVER ASK WHERE AI GETS ITS DATA FROM? Acknowledging BIAS in AI

When we interact with generative AI, whether asking it to draft an email or write an outline, we rarely stop to consider where its information comes from. The truth is, generative AI models are trained on massive datasets drawn from across the internet: books, articles, social media posts, websites, forums, and more. And as we all know, these sources are often not neutral!

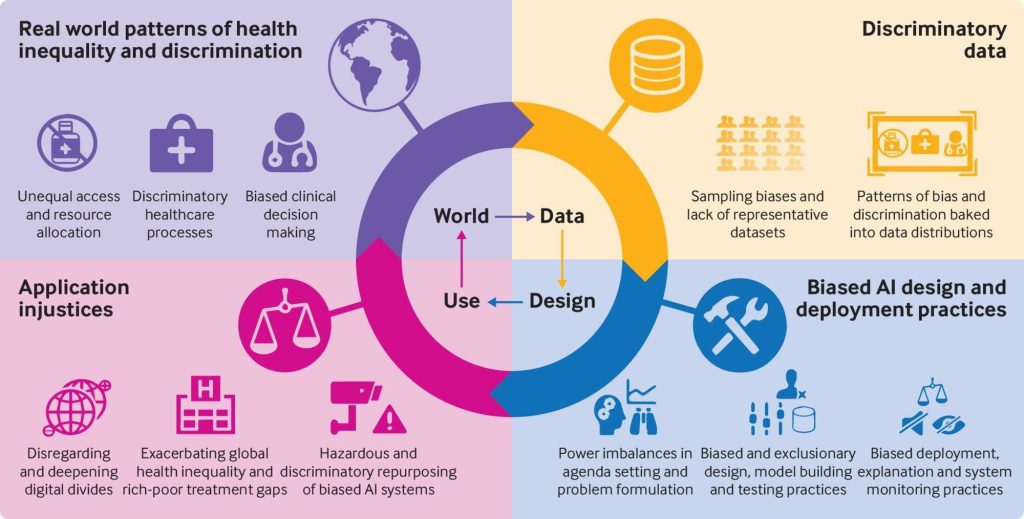

These sources reflect the dominant voices of the web, which means they’re often skewed, leading to the underrepresentation, misrepresentation, or overrepresentation of certain groups and perspectives. If an AI model is trained on biased, incomplete, or unbalanced data, it can easily reproduce those same patterns in the content it generates.

By ignoring where the data comes from and who curates it, we risk embedding the same inequities into the tools we use every day.

Bias in AI isn’t just a technical flaw; it’s a mirror. It reflects societal structures, historical inequities, and the power dynamics of those who build and control technology. But the good news is that once we see the mirror, we can choose what to do with it!

So the next time you’re impressed by a perfectly worded AI response or a stunning AI-generated image, ask yourself: Whose story is it telling, and whose story is it missing?

What Steps Can I Take to Stay Aware of Bias and Promote Transparency?

- Understand the Source of the AI Tool

  - Research who built the tool, how it was trained, and what datasets were used. Look for developers who are transparent about these details.

- Question the Output

  - Don’t take AI-generated content at face value. Cross-question it! Whose perspective is this? What might be missing or misrepresented?

  - Be alert to stereotypes, cultural assumptions, and generalizations about your own community or the communities around you.

- Diversify Your Prompts

  - Ask about the same idea in several different ways to see whether different prompts generate different responses, and consider what those differences mean.

- Fact-Check and Cross-Reference

  - Use credible human sources to verify AI content, especially when accuracy, representation, or inclusivity matters.

- Involve Human Oversight

  - Always pair AI-generated content with human judgment and your own ethical reflection.

- Disclose AI Involvement

  - Be transparent when content is AI-assisted, whether in writing, visuals, or decisions. This builds trust and accountability. See ‘Citing AI’ to learn more.

- Advocate for Explainability

  - Push for AI tools that offer insight into how they generate outputs and why they make certain choices. Ask: why was this resource used over another?

- Stay Educated on AI Ethics

  - Engage with resources on AI fairness, accountability, and transparency. Follow researchers, read papers, subscribe to newsletters, and have conversations about these issues.