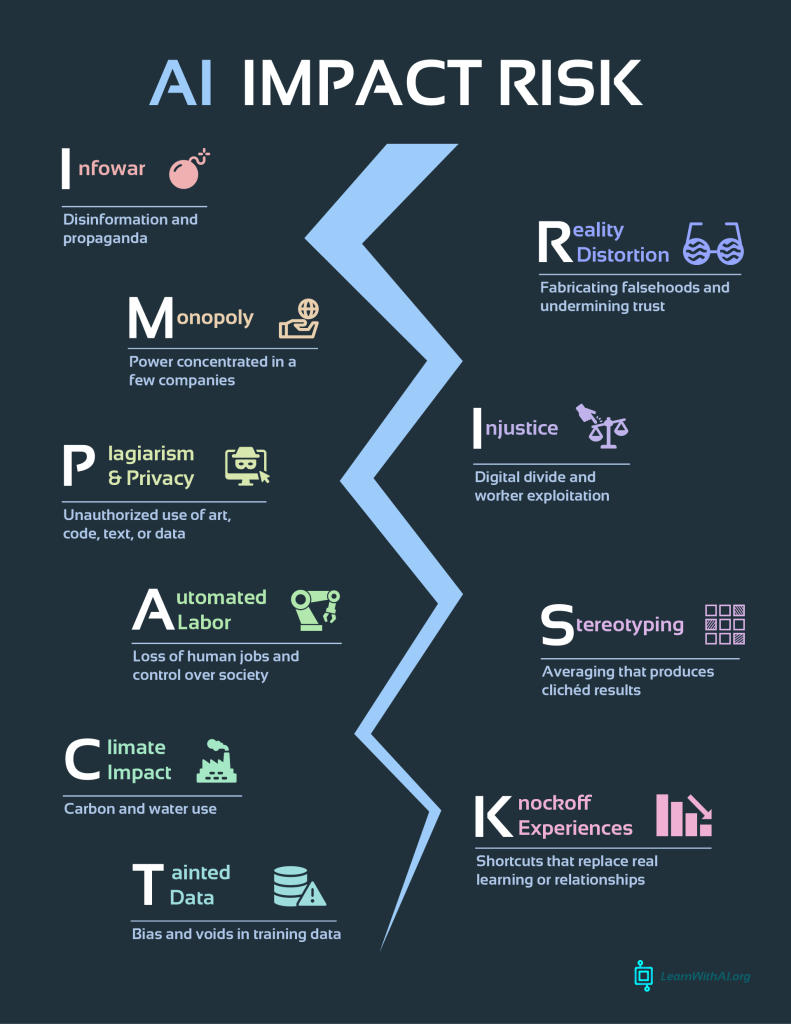

While some people are enthusiastically adopting AI tools for a variety of uses, others have strong moral concerns about using them. It’s important to recognize that the creation and use of these tools can have negative impacts on society at large.

AI is shaping our everyday lives, job markets, power structures, and future direction. The key to responsible use is how much we stay in charge, and how much we exercise our agency as learners, educators, policymakers, and the next generation.

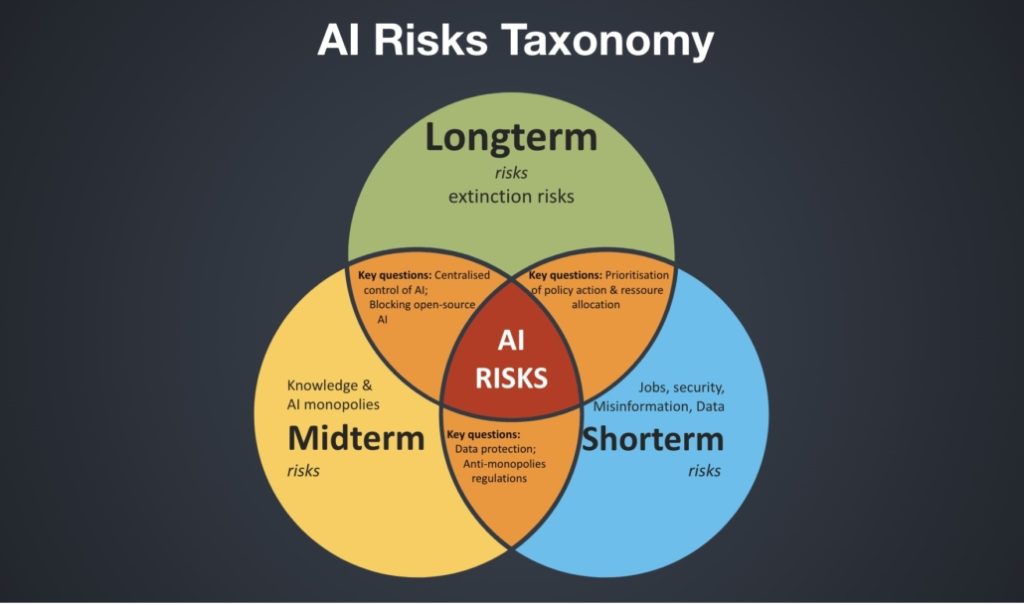

Should we become masters of open-source models or enforce stricter data protections? How do we prioritize today’s urgent problems without losing sight of tomorrow’s catastrophic ones?

AI poses layered risks. Short-term threats include job displacement, data privacy breaches, and the alarming rise of deepfakes. Deepfakes, realistic but fake content that blurs the line between fact and fiction and threatens democracy and public trust, are just one example. These aren’t hypotheticals anymore; they’re shaping elections, news cycles, and reputations right now. (Consider the fake news reporter who went viral. And would you even know if you had been interviewed by a deepfake recruiter?)

In the medium and long term, society must grapple with growing AI monopolies, where a few tech giants hold disproportionate power over access to knowledge, language models, and innovation. What happens when the tools that shape our reality are controlled by just a handful of entities? How do we ensure transparency and equity when open-source efforts are no longer the majority?