Co-authored by Andrew Hooper & Nafis Hasan

In a remarkable display of bipartisanship, the Senate passed HR 34, and President Obama signed the 21st Century Cures Act into law on Dec. 13, 2016. The original bill was introduced and sponsored by Rep. Suzanne Bonamici (D-OR) in January 2015. It garnered co-sponsors from both sides of the aisle, including Rep. Lamar Smith (R-TX), Chairman of the House Committee on Science, Space, and Technology. The House approved the original bill in Oct. 2015. After a year in the Senate, during which the bill underwent several amendments proposed by both Democrats and Republicans, the Senate approved it on Dec. 7, 2016 and sent it to President Obama to be signed into law.

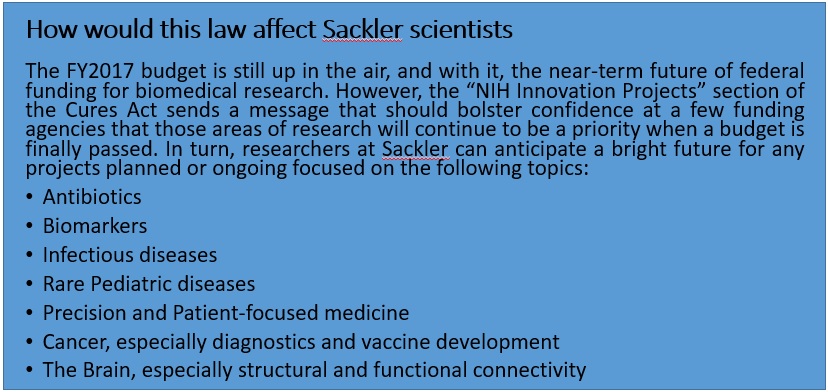

This law is meant to accelerate drug development, bring cutting-edge treatments to patients, and reform mental health research and the treatment of mental disorders, with a strong focus on the opioid crisis currently sweeping the nation. The law is also of significant importance to biomedical scientists, as it will expand funding for certain fields in line with the Precision Medicine Initiative launched in 2015. More specifically, the Cures Act will provide funding for specific NIH innovation projects such as the Precision Medicine Initiative ($4.5 billion through FY 2026), the BRAIN Initiative ($1.51 billion through FY 2026), the Cancer Moonshot ($1.8 billion through FY 2023), and the Regenerative Medicine (stem cell) program ($30 million through FY 2026). In addition, the law will stimulate innovative research by awarding investigators the Eureka Prize for “significant advances” or “improving health outcomes”. The law also seeks to support new researchers through its Next Generation of Researchers Initiative, an attempt to address the postdoc crisis in academia. In response to the lack of women and underrepresented minorities in STEM fields, the law also contains provisions to attract and retain such scientists in “priority research areas”. Finally, to further encourage early-stage researchers, the law authorizes programs to help repay student loans and raises the cap on the loan repayment assistance available to researchers.

Besides ensuring funding for biomedical research, this law aims to address privacy concerns raised by experts regarding patient information in the era of precision medicine (for more details, check out our analysis of the precision medicine initiative). Under this law, certificates of confidentiality will be provided to all NIH-funded researchers whose studies involve the collection of sensitive patient information. This information will be protected from disclosure, including in response to requests filed under the Freedom of Information Act. At the same time, to make data sharing easier for scientists, the law frees NIH from some of the red tape and regulations that have kept its scientists from attending scientific meetings and sharing data.

Despite the generally positive reception of the Cures Act by NIH officials and research scientists, the bill was not without its critics. The principal criticism of the final product is that it constitutes a handout to pharmaceutical and medical device companies by substantially weakening the FDA’s regulatory check on bringing new treatments into the clinic.

For example, Sydney Lupkin and Steven Findlay point to the $192 million worth of lobbying collectively expended by over a hundred pharmaceutical, medical device, and biotech companies on this and related pieces of legislation. The goal of this lobbying, Lupkin and Findlay assert, was to give the FDA “more discretion” in deciding how new drugs and other treatments gain approval for clinical use – presumably saving a great deal of money for the companies that develop them. Adding weight to their assertion is the fact that President Trump is reportedly considering venture capitalist Jim O’Neill for FDA commissioner. Mr. O’Neill is strongly supported by libertarian conservatives who see FDA regulations as inordinately expensive and cumbersome, so it seems reasonable to worry about how Mr. O’Neill would weigh safety against profit in applying his “discretion” as head of the FDA. On the other hand, under a wise and appropriately cautious commissioner with a healthy respect for scientific evidence, we might hope that maintaining high safety standards and reducing the current staggering cost of drug development are not mutually exclusive.

Additionally, Dr. David Gorski writes of one provision of the Cures Act that appears to specifically benefit a stem-cell entrepreneur who invested significantly in a lobbying firm pushing for looser approval standards at the FDA. Once again, it is not unreasonable to suspect that there is room to reduce cost and bureaucratic red tape without adversely impacting safety. And in fairness to the eventual nominee for FDA commissioner, previous commissioners have not been universally praised for their alacrity in getting promising treatments approved efficiently… at least, not within the financial sector. Still, the concerns expressed by medical professionals and regulatory experts over the FDA’s continued intellectual autonomy and ability to uphold rigorous safety standards are quite understandable, given the new administration’s enthusiasm for deregulation.

It appears that this law will also allow pharmaceutical companies to promote off-label uses of their products to insurance companies without conducting clinical trials. Additionally, pharma companies can submit “data summaries” instead of detailed clinical trial data when seeking approval of their products for “new avenues”. It is possible that these provisions were created with the NIH basket trials in mind (details here). However, as Dr. Gorski argues, without clinical trial data, off-label use of drugs will be based on “uncontrolled observational studies”, which may benefit pharma companies but, in the view of patient advocacy groups, put patients at risk. These fears are not without evidence: a recent article from STAT describes how the off-label use of Lupron, a sex hormone suppressor used to treat endometriosis in women and prostate cancer in men, has resulted in a diverse array of health problems in 20-year-olds who received the drug during puberty.

Another “Easter egg”, albeit an unpleasant one, awaits scientists and policy-makers alike. Buried in Title V of the law is a $3.5 billion cut to the Department of Health and Human Services’ Prevention and Public Health Fund, with no explanation offered for the cut. Given the outcry over the lack of public health initiatives in the Precision Medicine Initiative, one is again left to wonder why the 21st Century Cures Act focuses only on treatment and drug development and not on policies directed toward promoting public health and preventing disease.

In conclusion, the implementation of this law will largely depend on the current administration. With the NIH budget for FY 2017 still up in the air and the confirmation of nominees still hanging in the balance, this law is far from being implemented. Based on its provisions, it appears that biomedical funding will be boosted in particular fields, designated “priority research areas”. However, it should not escape an observant reader that this law also seems to give pharma companies more room to exploit consumers. It therefore remains an open question whose priorities (consumers/patients vs. investors/corporations) will be put first, and the answer, in our humble opinion, will be determined by a dialogue between the people and the government.

Sources/Further Reading –

- Full text of 21st Century Cures Act

- Summaries of sections

- NIH Director Dr. Francis Collins’ perspective in NEJM

- https://www.statnews.com/2016/12/05/21st-century-cures-act-winners-losers/

- http://rescuingbiomedicalresearch.org/blog/21st-century-cures-act-now-law/

- Dr. David Gorski’s rebuttal

- http://www.npr.org/sections/health-shots/2016/12/02/504139105/winners-and-losers-if-21st-century-cures-bill-becomes-law