HAVEN: A Unity-based Virtual Robot Environment to Showcase HRI-based Augmented Reality [PDF]

Due to the COVID-19 pandemic, in-person Human-Robot Interaction (HRI) studies are not permissible under the social distancing practices meant to limit the spread of the virus. A virtual reality (VR) simulation with a virtual robot may therefore offer an alternative to real-life HRI studies. Like a real intelligent robot, a virtual robot can use the same advanced algorithms to behave autonomously. This paper introduces HAVEN (HRI-based Augmentation in a Virtual robot Environment using uNity), a VR simulation that enables users to interact with a virtual robot. The goal of this system design is to let researchers conduct augmented reality (AR) HRI studies with a virtual robot, without needing a physical environment. The framework also provides two common HRI experiment designs: a hallway passing scenario and a human-robot team object retrieval scenario. Both demonstrate HAVEN’s potential as a tool for future AR-based HRI studies.

EV3Thoughts Sensors through Zoom

In this project, I developed a Unity3D tool that visualizes a robot’s sensor data with augmented reality and displays the augmented view through Zoom for online learning.

The robot (EV3) communicates with a Unity-based application over MQTT (Message Queuing Telemetry Transport), a publish-subscribe messaging protocol. The EV3 publishes its sensor values to a topic; the Unity application subscribes to that topic and updates the visualizations when new values arrive.
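The publish-subscribe flow can be sketched in Python with an in-process stand-in for the broker. This is a minimal illustration under stated assumptions, not the project's code: the topic names (`ev3/sensors/<name>`), the JSON payload `{"value": ...}`, and the `LocalBroker` and `SensorView` classes are hypothetical. A real setup would use an MQTT client library on both the EV3 and Unity sides plus a network broker; only the topic-filter matching here follows the MQTT wildcard rules (`+` matches one level, `#` matches the remaining levels).

```python
import json
from typing import Callable, Dict, List, Tuple

# Hypothetical topic layout and payload format (not taken from the project).
Callback = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic.

    '+' matches exactly one level; '#' (only valid as the last level)
    matches the parent level and any number of child levels.
    $-prefixed system topics are not special-cased in this sketch.
    """
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


class LocalBroker:
    """Minimal in-process publish-subscribe broker (illustration only)."""

    def __init__(self) -> None:
        self._subs: List[Tuple[str, Callback]] = []

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self._subs.append((topic_filter, callback))

    def publish(self, topic: str, payload: bytes) -> None:
        # Deliver the message to every subscriber whose filter matches.
        for topic_filter, callback in self._subs:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)


class SensorView:
    """Stands in for the Unity side: keeps the latest value per sensor."""

    def __init__(self) -> None:
        self.latest: Dict[str, float] = {}

    def on_message(self, topic: str, payload: bytes) -> None:
        sensor = topic.rsplit("/", 1)[-1]  # e.g. "ultrasonic"
        data = json.loads(payload.decode("utf-8"))
        self.latest[sensor] = float(data["value"])
        # A real visualization would redraw its AR overlay here.


if __name__ == "__main__":
    broker = LocalBroker()
    view = SensorView()
    broker.subscribe("ev3/sensors/+", view.on_message)

    # The EV3 side publishes one JSON message per reading.
    broker.publish("ev3/sensors/ultrasonic", b'{"value": 23.5}')
    broker.publish("ev3/sensors/gyro", b'{"value": -90}')
    broker.publish("ev3/motors/left", b'{"value": 50}')  # filtered out
    print(view.latest)
```

Publishing each sensor on its own subtopic lets the viewer subscribe with a single `+` wildcard and pick up new sensors without changing its subscription.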

The robot and its augmented reality features are also viewed through Zoom for remote learning. The robot is controlled from Google Slides, using a feature created by a Tufts University staff member.

EV3Thoughts is currently in development.

R.A.I.N. – A Vision Calibration Tool using Augmented Reality [PDF]

I made a mobile app prototype that lets a person calibrate or reprogram a robot’s perception of objects. Nearly every robot with a visual sensor relies on algorithms that make sense of the raw sensor data. For example, a depth measurement that picks up a spherical cluster of points with a certain approximate radius could indicate that the robot is seeing a basketball, a Ping-Pong ball, or a similar object. This is one way a robot can tell objects in the world apart.
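The basketball example can be made concrete with a least-squares sphere fit: estimate a point cluster's center and radius, then map the radius to a label. This Python sketch is an illustration under assumptions, not R.A.I.N.'s detector. It uses the algebraic fit x² + y² + z² = 2a·x + 2b·y + 2c·z + e, where (a, b, c) is the center and r² = e + a² + b² + c². The ranges in the hypothetical `RADIUS_LABELS` table are chosen around a regulation basketball's radius of roughly 0.12 m and a table-tennis ball's 0.02 m.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical label ranges for the estimated radius, in metres.
RADIUS_LABELS = [
    ("ping-pong ball", 0.015, 0.025),
    ("basketball", 0.11, 0.13),
]


def solve_linear(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve m x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / aug[r][r]
    return x


def fit_sphere(points: Sequence[Point]) -> Tuple[Point, float]:
    """Algebraic least-squares sphere fit; returns (center, radius)."""
    n = len(points)
    if n < 4:
        raise ValueError("need at least 4 points")
    # Shift to the centroid so the normal equations stay well conditioned.
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    mz = sum(p[2] for p in points) / n
    ata = [[0.0] * 4 for _ in range(4)]
    atb = [0.0] * 4
    for px, py, pz in points:
        x, y, z = px - mx, py - my, pz - mz
        row = (x, y, z, 1.0)
        rhs = x * x + y * y + z * z
        for i in range(4):
            atb[i] += row[i] * rhs
            for j in range(4):
                ata[i][j] += row[i] * row[j]
    p = solve_linear(ata, atb)  # p = [2a, 2b, 2c, e] in shifted coordinates
    a, b, c = p[0] / 2, p[1] / 2, p[2] / 2
    radius = math.sqrt(p[3] + a * a + b * b + c * c)
    return (a + mx, b + my, c + mz), radius


def classify(radius: float) -> Optional[str]:
    """Return the first label whose radius range contains `radius`."""
    for label, lo, hi in RADIUS_LABELS:
        if lo <= radius <= hi:
            return label
    return None


if __name__ == "__main__":
    # Synthetic points on a 0.12 m sphere centred at (1.0, 0.0, 0.5).
    cx, cy, cz, r = 1.0, 0.0, 0.5, 0.12
    pts = []
    for i in range(1, 6):
        theta = math.pi * i / 6  # polar angle
        for j in range(8):
            phi = 2 * math.pi * j / 8  # azimuth
            pts.append((cx + r * math.sin(theta) * math.cos(phi),
                        cy + r * math.sin(theta) * math.sin(phi),
                        cz + r * math.cos(theta)))
    center, radius = fit_sphere(pts)
    print(round(radius, 3), classify(radius))
```

If a ball's fitted radius falls just outside every range, `classify` returns `None`, which is exactly the kind of hardcoded-limit failure the next paragraph describes.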

Sometimes a robot cannot recognize an object even when it is directly in front of the robot, because the object detection algorithm may contain parameter limits that are usually hardcoded. Those parameters can be changed, but only if you know where to look within the robot’s software. A computer vision expert can do this, but it is almost impossible for everyone else.

The app, R.A.I.N. (Robot-parameters Adjusted In No-time), works with PointCloud messages and lets anyone adjust these parameters and receive visual feedback immediately, so that the robot can continue operating adequately within its environment. This project will be used in human participant studies later in the year.
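The kind of adjustment R.A.I.N. enables can be illustrated with a detector whose limits are exposed as named parameters and which re-runs detection whenever one changes. In this Python sketch, `BallDetectorParams`, `Cluster`, `TunableDetector`, and the default limits are all hypothetical; R.A.I.N.'s actual parameters and interface are not described here, and the clusters are assumed to have already been segmented from the PointCloud data.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class BallDetectorParams:
    # Hypothetical limits that a detector might otherwise hardcode.
    min_radius: float = 0.015  # metres
    max_radius: float = 0.025  # metres
    min_points: int = 30


@dataclass
class Cluster:
    cluster_id: int
    radius: float  # estimated radius of a roughly spherical cluster
    num_points: int


class TunableDetector:
    """Re-runs detection whenever a parameter changes (illustration only)."""

    def __init__(self, params: BallDetectorParams) -> None:
        self.params = params
        self.clusters: List[Cluster] = []

    def detect(self) -> List[int]:
        """IDs of clusters that pass every current limit."""
        p = self.params
        return [c.cluster_id for c in self.clusters
                if p.min_radius <= c.radius <= p.max_radius
                and c.num_points >= p.min_points]

    def set_param(self, name: str, value: float) -> List[int]:
        """Update one parameter and return the detections it produces."""
        if name not in {f.name for f in fields(self.params)}:
            raise KeyError(f"unknown parameter: {name}")
        # Keep the field's original type (e.g. int for min_points).
        current = getattr(self.params, name)
        setattr(self.params, name, type(current)(value))
        # Immediate feedback: re-evaluate with the new setting.
        return self.detect()


if __name__ == "__main__":
    det = TunableDetector(BallDetectorParams())
    det.clusters = [Cluster(0, 0.02, 120), Cluster(1, 0.033, 200)]
    print(det.detect())                       # [0]
    print(det.set_param("max_radius", 0.04))  # [0, 1]
```

Returning the new detections from `set_param` is what provides the immediate visual feedback: widening `max_radius` makes a previously ignored cluster appear without restarting anything.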

Augmenting Visual Signals to Convey Robot Motion Intent in a Shared Environment

In this project, we modify a mobile robot with augmented reality (AR) signals that reveal its navigation plans to a person wearing an AR device. In the photo, a person wearing a Microsoft HoloLens can see the TurtleBot’s costmap (dotted grid), path trajectory (yellow dotted line), and laser scan data (red dots). We are conducting a human study to see how participants perceive and use this data when encountering the robot for the first time.

Videos of the different visuals: [Path Trajectory][LaserScan][Costmap][People Detection]
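The laser scan (red dots) and costmap (dotted grid) overlays both begin by turning ROS messages into positions that can be drawn. The Python sketch below mirrors a subset of the fields of `sensor_msgs/LaserScan` and `nav_msgs/OccupancyGrid` in plain dataclasses instead of importing ROS. The 50 occupancy threshold is an assumption, the grid origin is assumed to be unrotated, and transforming the points into the HoloLens frame is not shown.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LaserScan:
    # Subset of ROS sensor_msgs/LaserScan fields.
    angle_min: float
    angle_increment: float
    range_min: float
    range_max: float
    ranges: List[float]


@dataclass
class OccupancyGrid:
    # Subset of ROS nav_msgs/OccupancyGrid: row-major data, -1 = unknown,
    # 0-100 = occupancy. The origin's rotation is assumed to be zero here.
    resolution: float  # metres per cell
    width: int
    height: int
    origin_x: float
    origin_y: float
    data: List[int]


def scan_to_points(scan: LaserScan) -> List[Tuple[float, float]]:
    """Convert polar range readings to (x, y) points in the laser frame."""
    points = []
    for i, r in enumerate(scan.ranges):
        # Skips out-of-range readings; NaN fails both comparisons too.
        if not (scan.range_min <= r <= scan.range_max):
            continue
        angle = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def occupied_cell_centers(grid: OccupancyGrid,
                          threshold: int = 50) -> List[Tuple[float, float]]:
    """World (x, y) centre of every cell at or above `threshold`."""
    centers = []
    for row in range(grid.height):
        for col in range(grid.width):
            value = grid.data[row * grid.width + col]
            if value >= threshold:
                centers.append((grid.origin_x + (col + 0.5) * grid.resolution,
                                grid.origin_y + (row + 0.5) * grid.resolution))
    return centers


if __name__ == "__main__":
    scan = LaserScan(0.0, math.pi / 2, 0.1, 10.0, [1.0, 2.0, float("inf")])
    print(scan_to_points(scan))
    grid = OccupancyGrid(0.5, 2, 2, -1.0, -1.0, [0, 100, -1, 60])
    print(occupied_cell_centers(grid))
```

Each returned point or cell centre would then become one rendered marker in the AR view.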

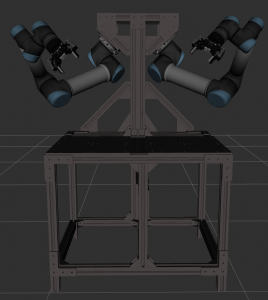

In this project, I plan to apply augmented reality gestures to the dual Universal Robots UR5 arm setup seen in the photo. Currently, my lab and I are setting up the motion planning configuration files to run simulations in Gazebo.

Videos: [Motion Planning]