EmpathyBot

Your new best friend: a robot that gives you an encouraging message based on your emotions.

Caroline

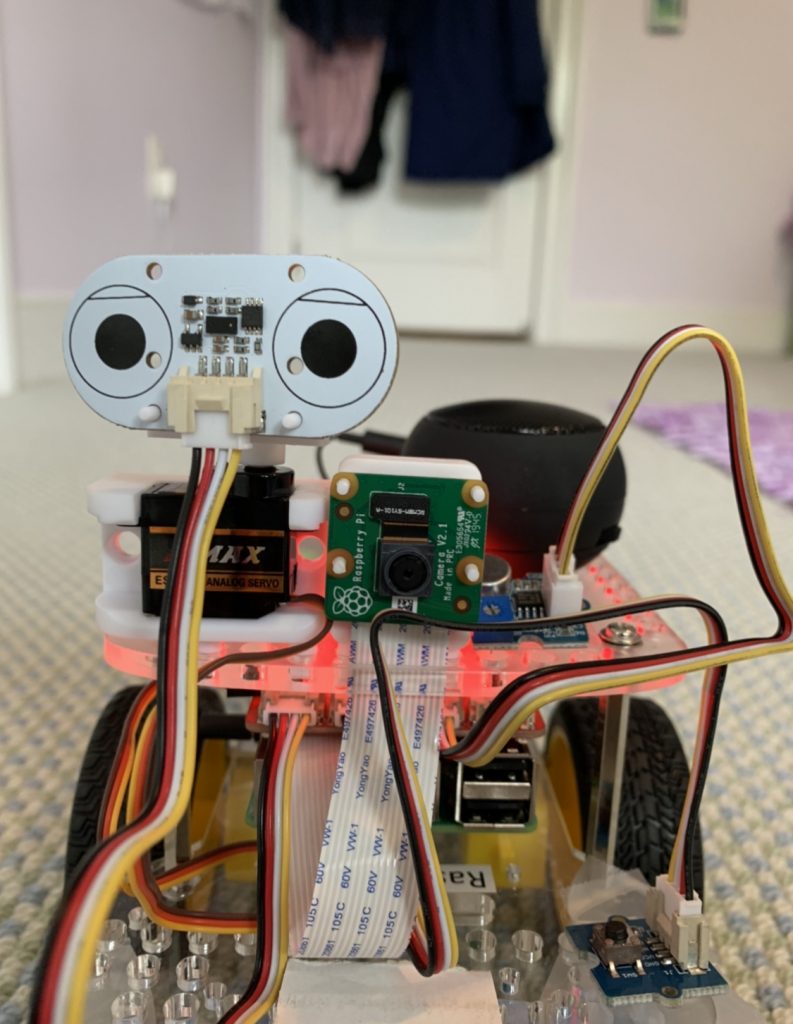

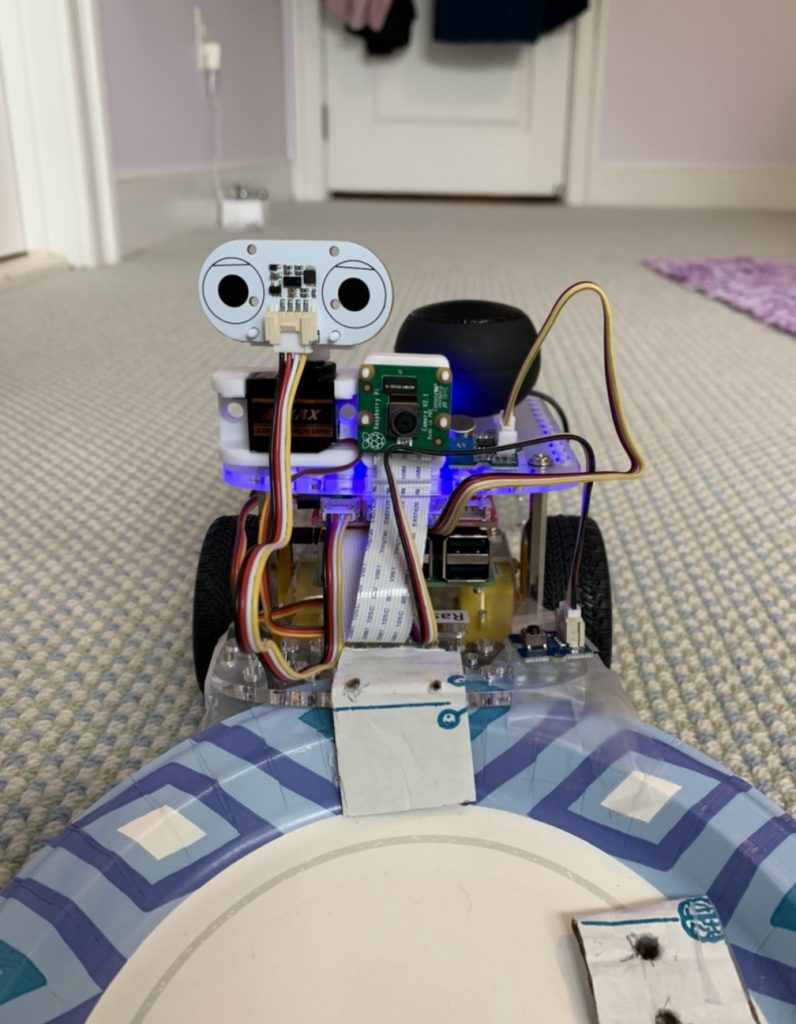

First, the robot drives up to you and stops about 20 cm away, using readings from its distance sensor. Next, it introduces itself through its speaker. When it is ready to empathize, it snaps a picture of you with the Raspberry Pi camera and determines your emotion using the Google Cloud Vision API. A set of conditionals then selects a response based on your perceived emotion: the robot has a verbal response and an LED color for each of happiness, sadness, anger, and surprise. If you are out of the frame, or the camera fails to detect your face, the robot says so and asks to try again. Finally, once the robot has finished analyzing your emotions, it drives backwards to return to its original position.
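The conditional step could look roughly like the sketch below. It is not the robot's actual code: the messages, LED colors, the "neutral" fallback, and the `LIKELY` threshold are all made up for illustration, and the `face` dictionary is a simplified stand-in for the joy, sorrow, anger, and surprise likelihoods that Cloud Vision face detection reports. The hardware calls (speaker, LEDs, camera) are left out so the snippet runs anywhere.

```python
# Likelihood levels used by Cloud Vision face detection, lowest to highest.
LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]
EMOTIONS = ["joy", "sorrow", "anger", "surprise"]

# Hypothetical responses: (spoken message, LED color as an RGB tuple).
RESPONSES = {
    "joy": ("You look happy! Keep smiling.", (0, 255, 0)),
    "sorrow": ("You seem sad. Things will get better.", (0, 0, 255)),
    "anger": ("You seem upset. Take a deep breath.", (255, 0, 0)),
    "surprise": ("Wow, you look surprised!", (255, 255, 0)),
    "neutral": ("I can't quite read your mood.", (255, 255, 255)),
    "no_face": ("I can't see your face. Let's try again.", (0, 0, 0)),
}


def pick_emotion(face, threshold="LIKELY"):
    """Return the strongest emotion, "neutral", or "no_face".

    face is None when no face was detected; otherwise it is a dict
    mapping an emotion name to one of the LEVELS strings.
    """
    if face is None:
        return "no_face"

    def rank(emotion):
        # Missing emotions are treated as UNKNOWN (the lowest level).
        return LEVELS.index(face.get(emotion, "UNKNOWN"))

    best = max(EMOTIONS, key=rank)
    if rank(best) < LEVELS.index(threshold):
        return "neutral"  # assumed fallback when nothing is likely enough
    return best


def respond(face):
    """Look up the message and LED color for a detected face."""
    return RESPONSES[pick_emotion(face)]


if __name__ == "__main__":
    message, color = respond({"joy": "VERY_LIKELY", "sorrow": "VERY_UNLIKELY"})
    print(message, color)
```

On the robot, the message would go to the speaker and the color to the LEDs instead of being printed.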

this is so cool!! good job working with a new API and making it drive around, and I love the video it’s awesome!!

Thank you!! I think I had a little too much fun making the video haha

A very powerful robot that could have many applications even outside of robotics

Thank you, that’s very inspiring!!

Nice work combining the camera and Google API. Also, Oscar-winning acting in the video 🙂

Thank you– acting is clearly a specialty of mine as shown from this video (not really at all haha)

I couldn’t have done it without you!

Great! I didn't even think about using an API in that way. Very cool.

Thank you! Google Cloud Vision has some really cool applications!

I think it's really cool and fascinating that you were able to work with the camera that way

Thank you! I really enjoyed using the camera during this camp!

A very creative use of APIs to help make a wellness robot that could help people and make a difference!

That is an awesome idea!! I hadn’t thought of that before! That reminds me of the PARO robot used to treat depression that James Staley talked about– super cool!

Cool robot, especially since you used a new API

Thank you– asking for help was definitely a life saver

Has a lot of great applications

Thank you– I’m looking forward to exploring some of them!

That use of APIs is cool

Thank you! Google Vision has so many applications– check out Caroline Baillie’s project for a different application of the API!

Really cool how it analyzes your facial expressions!

Thank you! I couldn’t have done it without your help!

That worked so well! Nice work!

Thank you! It definitely took a lot of tweaking, but once the Google API worked, the robot became very good at recognizing expressions!

What a powerful robot! Great work for just 2 days

Thank you! I’m glad it worked so well– I was definitely a little nervous about it at first.

So cool!

Thank you!! I loved your project, too! Caroline and Google API twins!

This is super cool, I love the use of the LEDs!

Thank you! I had a lot of fun with the LEDs!

Awesome use of APIs

Thank you! APIs are so cool!

This is a cool idea. I like that the robot says something to you after analyzing your face instead of just leaving you sad.

Thank you! The original code actually had the robot respond “do you need a hug?” to sadness, which I thought was really cute. But I wanted to save that response for a further developed version of this robot, where it might have arms or something to interact with or hug you (though it would probably be a sort of mini hug, because the robot would have to be really big to hug a human).

Great robot and very creative! This is really cool!!

Thank you!! I’m excited to explore more creative applications later!

Very outside of the box! Nice!

Thank you! The original idea from Dexter really stood out to me!