Test-Driven Software Development

Abstract

Test-Driven Development (TDD) emphasizes writing tests for a software module before writing the implementation of that module. This article lays out the TDD method, contrasts its benefits and weaknesses, and provides an example of the TDD-based workflow.

Introduction

What is Test-Driven Development?

Test-driven development (TDD) is the practice of writing tests for a software module and then using those tests as a guide for writing a reliable implementation of the module (Olan, 2003). This methodology is known as “test-first” development, in contrast to the common “test-last” method, in which software is implemented first and tested for correctness only after completion (Vu, Frojd, Shenkel-Therolf, & Janzen, 2009). TDD is one of the many tenets of extreme programming and the agile development process (Agarwal & Umphress, 2008). The method promotes short iterative development cycles to deliver system features as quickly as possible.

Why is Test-Driven Development useful?

Test-driven development is used in practice to guide implementation development, verify program functionality, and improve long-term software reliability.

Writing unit tests for software prior to implementing the software functionality allows a developer to specify how the software feature should function before adding the feature to the code base. Good test harnesses, such as the JUnit framework, will run tests automatically and report the success of tests to the programmer, helping the programmer write a correctly functioning implementation (Olan, 2003). This guidance can be particularly helpful when developing a system while learning a new technology.

By writing the implementation with the goal of passing tests, the software developer can be assured that the code performs as dictated by the contract that the unit tests define. TDD leads to high test coverage of a code base because code is only added to satisfy a test that has already been written. Program functionality can be easily verified by following the TDD methodology because all added code is associated with at least one test. A study into the efficacy of TDD performed by Microsoft reports increased confidence in code correctness when developing with TDD (Bhat & Nagappan, 2006).

The automated unit tests written during TDD continue to be run as a project develops, ensuring that new features do not break existing functionality. Continuous project-wide tests ensure that each individual component of a large project functions as expected, and they maintain code base reliability as the project expands and ages. Adding a new feature is simply a matter of writing tests for that feature, writing the implementation to pass those tests, and then running system-wide tests to verify that all components of the software continue to perform as expected. A study into the efficacy of TDD performed by Microsoft found a large decrease in code defects when using TDD (Bhat & Nagappan, 2006).

Are there Issues with Test-Driven Development?

TDD is not a magic bullet when it comes to the task of ensuring software correctness. There are some pitfalls and shortcomings of which those following a TDD-based workflow should be aware.

Inherent in TDD is the need to write more code up front. Tests must be written before implementation, and the system cannot be used until the implementation is complete. If tests are not written well, then redundancy in testing code is probable, resulting in unneeded code that the programmer must spend time writing (Olan, 2003). The Microsoft study found that in some cases the amount of code required by TDD nearly doubled (Bhat & Nagappan, 2006). Writing more unit tests to gain experience with the concept is the best way to reduce the redundancy of TDD code, so this is an issue that occurs most frequently early in the switch to TDD.

Poorly written tests inspire false confidence in code functionality. Implementation that is not intended to pass a specific test runs the risk of introducing bugs that are not detected. Writing better tests to specify all of the features of a code addition and minimizing added code are the best ways to counter these risks. A study performed by the Indian Institute of Technology found that, in an academic setting, code quality could decrease with TDD (Gupta & Jalote, 2007), likely due to this very issue. This issue is commonly found when a developer is learning how to effectively use TDD, so again experience at developing with test-based contracts diminishes the risk of overconfidence in code.
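The sketch below illustrates how an under-specified test can hide a defect. The function `is_leap_year` and both tests are hypothetical examples, not taken from any of the cited studies. The implementation is deliberately incomplete, yet the weak test passes. Only the test that also specifies the century rules exposes the problem.

```python
def is_leap_year(year):
    """Deliberately incomplete: ignores the Gregorian century rules."""
    return year % 4 == 0


def weak_test():
    # Passes, but only exercises the easy cases.
    assert is_leap_year(2024)
    assert not is_leap_year(2023)


def thorough_test():
    # Also specifies the century rules: 2000 is a leap year, 1900 is not.
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert is_leap_year(2000)
    assert not is_leap_year(1900)


def passes(test):
    """Return True if the test function runs without an assertion failure."""
    try:
        test()
    except AssertionError:
        return False
    return True


print(passes(weak_test), passes(thorough_test))  # True False
```

A developer relying on `weak_test` alone would see a passing suite and could reasonably, but wrongly, conclude that the function is correct.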

Some systems do not lend themselves well to the style of TDD. While it is possible to write “test-first” software for application UIs, network programming, security protocols, etc., it is difficult to adequately cover the large breadth of possible interactions with these programs. In these cases fuzz testing could be used to generate large numbers of test cases, but this is beyond the scope of this article.

The Test-Driven Workflow

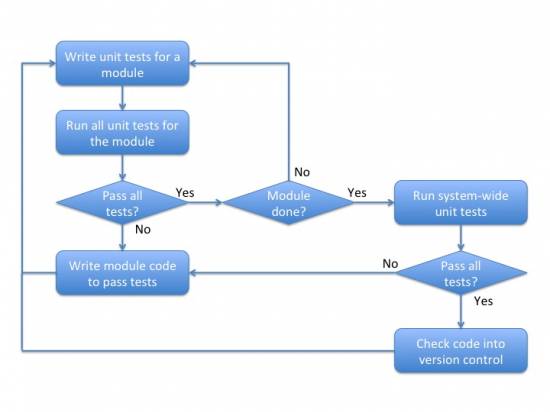

The TDD workflow involves rapid iterations of writing tests and then functionality for small portions of code. At a glance the workflow follows this pattern (Olan, 2003):

1. Write unit tests specifying the behavior of a module.

2. Run all tests. If all tests pass, go to step 1; otherwise continue to step 3.

3. Edit the implementation to attempt to pass the failing tests. Go to step 2.

The software developer runs the tests to find failing unit tests for the module and then edits the implementation to pass the failing test cases. The implementation is edited to add the minimal amount of code required to pass the tests. Writing only the minimal code required to pass the tests is important because it simplifies the implementation and prevents the addition of untested features to the code. The test-implement-repeat cycle continues until an implementation is finalized that successfully passes all unit tests.
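The cycle described above can be sketched in a few lines of Python. The `clamp` function and its tests are hypothetical, and the two successive "edits" are modeled as two versions of the function so that the whole loop fits in one runnable script. In real use the developer would edit a single function in place between test runs.

```python
import io
import unittest


def clamp_v1(value, low, high):
    """First edit: only handles values that are already in range."""
    return value


def clamp_v2(value, low, high):
    """Second edit: the minimal change needed to satisfy the failing tests."""
    return max(low, min(value, high))


def make_tests(clamp):
    """Step 1: unit tests specifying the behavior of the module."""

    class ClampTest(unittest.TestCase):
        def test_value_in_range_is_unchanged(self):
            self.assertEqual(clamp(5, 0, 10), 5)

        def test_value_below_range_is_raised_to_low(self):
            self.assertEqual(clamp(-3, 0, 10), 0)

        def test_value_above_range_is_lowered_to_high(self):
            self.assertEqual(clamp(42, 0, 10), 10)

    return unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)


def run_tests(clamp):
    """Step 2: run all tests and return the number that did not pass."""
    runner = unittest.TextTestRunner(stream=io.StringIO())
    result = runner.run(make_tests(clamp))
    return len(result.failures) + len(result.errors)


history = []
for edit in (clamp_v1, clamp_v2):  # Step 3: each item stands for one edit
    failing = run_tests(edit)
    history.append((edit.__name__, failing))
    if failing == 0:
        break  # all tests pass; return to step 1 for the next behavior

print(history)  # [('clamp_v1', 2), ('clamp_v2', 0)]
```

Note that `clamp_v2` adds nothing beyond what the three tests demand, which is the sense in which the added code is minimal.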

After the module has been finished, system-wide unit tests are run to ensure that the existing code still functions properly. Integration tests should be written and used to check that new modules interact properly with other modules. These integration tests are essentially system-wide unit tests that check specific interactions within the project. Any issues should be resolved with another series of test-edit iterations, again modifying the minimal amount of code needed to get the system tests to pass. Once all system tests pass, the code can be committed to any version control system in use (such as Git, Mercurial, or SVN) and work on the next module can begin.
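To make the distinction between unit and integration tests concrete, the following sketch tests two hypothetical modules, a sender-side `split_into_chunks` and a receiver-side `reassemble`, first in isolation and then together. All names are assumptions made for illustration. The helper `run_system_tests` stands in for a system-wide test run by collecting every test case into a single suite.

```python
import io
import unittest


def split_into_chunks(data, size):
    """Hypothetical sender-side module: cut bytes into fixed-size chunks."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def reassemble(chunks):
    """Hypothetical receiver-side module: join chunks back together."""
    return b"".join(chunks)


class SplitUnitTest(unittest.TestCase):
    def test_final_chunk_may_be_short(self):
        self.assertEqual(split_into_chunks(b"abcde", 2), [b"ab", b"cd", b"e"])


class ReassembleUnitTest(unittest.TestCase):
    def test_chunks_are_joined_in_order(self):
        self.assertEqual(reassemble([b"ab", b"cd"]), b"abcd")


class TransferIntegrationTest(unittest.TestCase):
    """Checks the interaction between the two modules, not either one alone."""

    def test_round_trip_preserves_data(self):
        payload = bytes(range(256)) * 3 + b"tail"
        for size in (1, 7, 64, 1024):
            with self.subTest(chunk_size=size):
                chunks = split_into_chunks(payload, size)
                self.assertEqual(reassemble(chunks), payload)


def run_system_tests():
    """Run every test case in this sketch as one combined suite."""
    loader = unittest.defaultTestLoader
    cases = (SplitUnitTest, ReassembleUnitTest, TransferIntegrationTest)
    suite = unittest.TestSuite(loader.loadTestsFromTestCase(c) for c in cases)
    return unittest.TextTestRunner(stream=io.StringIO()).run(suite)


system_result = run_system_tests()
print(system_result.testsRun, system_result.wasSuccessful())  # 3 True
```

The round-trip test deliberately uses chunk sizes that do not divide the payload evenly, since the final short chunk is a boundary that neither unit test checks end to end.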

Figure 1

Flow chart describing the development process followed when using TDD, based upon the process used by Microsoft (Bhat & Nagappan, 2006).

Test-Driven Development in Practice

IBM has adopted TDD for some internal projects and has conducted a study comparing the “test-first” development method to the “test-last” method. Developers saw a 50% decrease in defects per thousand lines of code, a modular system capable of integrating new features late in the development process, and the natural development of a significant suite of regression tests. Engineers participating in the study were very positive about TDD and have continued to use TDD on IBM projects (Williams, Maximilien, & Vouk, 2003).

Microsoft performed a similar study into the efficacy of TDD. Engineers using “test-first” development methods saw a factor of 4 decrease in defects per thousand lines of code for a 15% increase in development time. Microsoft concluded the study claiming that TDD is effective at reducing defects if the developer can accept a significantly increased development time. The study states that the decision to use TDD is left to the individual project groups within the organization, allowing those that have seen significant benefit to continue to use TDD (Bhat & Nagappan, 2006).

As an anecdote, the author found TDD to be invaluable when writing a distributed file system for a computer networks class. Diligent adherence to the TDD method created a robust testing framework alongside the required server and client programs. Late one night a bug was introduced into the server program; occasionally the server would write a mangled portion of the file onto disk, subtly changing the file being transferred. The symptoms of the problem were few and far between and were not indicative of the root cause of the problem: a null terminator was not being added to the proper location in the final chunk of the file. The bug was quickly found by using the failing test cases in the unit tests created while following TDD. Without those tests, the bug would have taken an order of magnitude more time to find.

The Case for Test-Driven Development

TDD promotes writing unit tests before implementation with the intent of reducing software defects without greatly increasing development time. An engineer with some experience and a willingness to invert the standard “test-last” process can achieve huge benefits in defect reduction and confidence in code correctness. TDD does have a learning curve and its share of issues, but with practice and forethought these pitfalls can be avoided. While the process is significantly different from the usual development route, it is still relatively straightforward and can be applied to a wide range of projects.

There are promising case studies in industry and academia that supply evidence for the utility of TDD. Software to facilitate the adoption of TDD into project development has been created for many languages and environments. Many development shops and software communities are subscribing to TDD as their main development process; web developers using the popular Ruby on Rails are a particularly strong example of a community that has embraced TDD. “Test-first” development is rapidly gaining a place in industry and academia as a valid development process.

There is little to lose and much to gain from adopting the test-driven development workflow. Even if the development style does not turn out to integrate well with a project, the developer gains a new perspective in the attempt.

References

- Agarwal, R., & Umphress, D. (2008). Extreme programming for a single person team. Proceedings of the 46th Annual Southeast Regional Conference (ACM-SE 46), 82–87. New York, NY, USA: ACM. DOI: 10.1145/1593105.1593127

- Bhat, T., & Nagappan, N. (2006). Evaluating the efficacy of test-driven development: industrial case studies. 2006 ACM/IEEE international symposium on Empirical software engineering (ISESE), 356–363. New York, NY, USA: ACM. DOI: 10.1145/1159733.1159787

- Gupta, A., & Jalote, P. (2007). An Experimental Evaluation of the Effectiveness and Efficiency of the Test Driven Development. First International Symposium on Empirical Software Engineering and Measurement (ESEM), 285–294. DOI: 10.1109/ESEM.2007.41

- Olan, M. (2003). Unit testing: test early, test often. Journal of Computing Sciences in Colleges, 19(2), 319–328. Retrieved from ACM Digital Library

- Vu, J. H., Frojd, N., Shenkel-Therolf, C., & Janzen, D. S. (2009). Evaluating Test-Driven Development in an Industry-Sponsored Capstone Project. Sixth International Conference on Information Technology: New Generations (ITNG), 229–234. DOI: 10.1109/ITNG.2009.11

- Williams, L., Maximilien, E. M., & Vouk, M. (2003). Test-driven development as a defect-reduction practice. 14th International Symposium on Software Reliability Engineering (ISSRE), 34–45. DOI: 10.1109/ISSRE.2003.1251029