What is the relationship between Human Factors Engineering and design? What separates good design from bad design? How does a designer do design? As a cognitive psychologist, a consumer psychologist, and the previous director of a leading Human Factors Engineering program, I am developing a theoretical framework to understand the past and future trajectory of the field.

The Nature of Design

This framework is predicated on the idea that designers “do design” by bringing creativity together with constraints and opportunities to a problem space. As the architect Christopher Alexander (1964) notes, a designer looks for a form that “works” within a “context.” Alexander’s context is what I refer to as the “constraint and opportunity space” within which a designer works. While constraints may sound like bad things, most designers know that it is only through uncovering and applying constraints (and identifying opportunities) that a design is able to hit the mark. Sometimes constraints and opportunities come from the properties of materials (e.g. how flexible or heat-resistant they might be), from manufacturing processes, or from other physical properties of the world around us. In other cases, these constraints and opportunities are related to the human user. It is these human-based constraints that are especially (perhaps uniquely) important to the Human Factors Engineer. In an abstract sense, one of the major roles of human factors engineering is to uncover constraints and opportunities that can inform design (of products or of systems). Over the past 100 years, the field of human factors engineering has moved through several notable phases. By understanding this trajectory, we can gain a deeper understanding of both where the field has been and where it is going.

Human Factors 1.0

In the early days of Human Factors Engineering (HFE), early- to mid-20th century, designers focused on aspects of human physical limitations and opportunities to inform their work. When designing the shape of a control, the size of the chair, the angle of the keyboard, or the width of a doorway, a designer would be informed by known aspects of the physical size, limitations, or abilities of humans. Classic texts like The Measure of Man (Dreyfuss, 1960) emerged, and designers had measurement techniques and reference books to which they could refer.

These early HFE designers did not rely simply on aesthetic sensibilities or material possibilities and limitations. Instead, they took a systematic approach to figuring out all the relevant human physical constraints and opportunities. In some sense, this earliest form of human factors engineering was very much concerned with ergonomics. This approach is what I refer to as “Human Factors 1.0”.

Practitioners of Human Factors 1.0 expanded their scope beyond simple physical systems; it was not all about chairs, desks, cockpits, and displays. They applied the same physical tools, methods, and understandings to the design of a wide range of complex systems. Everything from the size of a font, to the color of a display, to the specifics of an auditory alert was informed by knowledge of human physical sensory systems. Methods like behavioral or hierarchical task analysis and signal-detection theory soon entered the scene. Over time, hospitals, airplanes, and a wide range of complex human systems got safer, better, and more fit for purpose.

Human Factors 2.0

In the 1960s and 1970s, as the “cognitive revolution” swept through psychology, designers began to realize that it was not only the physical interaction and sensory aspects of humans that should constrain design. In the world of Human Factors 2.0, designers continued to acknowledge and uncover physical constraints to inform design, but they added to the mix the cognitive limitations of humans. Human memory, attention, and decision-making were all ripe areas for research and discovery. Shannon’s information theory (Shannon, 1948) helped inform the HFE toolkit, and information-presentation and decision-making entered the scene. Task analysis was expanded to include cognitive-task analysis. And even the world of physical design felt the impact.

Consider, for example, the design of a computer mouse. A designer working under the older framework of Human Factors 1.0 would design a mouse such that it was the right size to be grabbed and that its use would not cause carpal tunnel syndrome. But the designer working in the framework of Human Factors 2.0 would also ensure that there were not 12 buttons on the mouse, because, while all 12 might be nearly equally press-able (from a physical perspective), few ordinary humans could control and map all these buttons to unique and memorizable functions. Human cognitive structures could not easily support such mappings.

Later, as digital displays got more complex, the designer operating in the Human Factors 2.0 framework had a great deal to offer this burgeoning field. For example, when designing a website or an app, tools such as card-sorting – and theories such as information theory – could be used to understand the limitations of human memory and cognition and thus inform the breadth and depth of menu structures or selection pathways. Attentional limitations, another purview of Human Factors 2.0, could further inform alerts, visualizations, and display elements. Today many HFE professionals apply their skills in the world of user-interface (UI) and user-experience (UX) design.

Human Factors 3.0

As we enter the current period I see our constraint-space (and the opportunity-space) expanding beyond the physical and cognitive constraints of Human Factors 2.0 — and a new framework is emerging. It is important to lay out this new framework — to make it explicit — and thus to further develop the tools, methods, and approaches that will empower the Human Factors 3.0 movement.

Under Human Factors 1.0, physical limitations/opportunities informed design. Under Human Factors 2.0, physical and cognitive limitations/opportunities informed design. In Human Factors 3.0, we understand that emotional limitations (and aspirations/opportunities) must further inform design. When designing a computer mouse, for example, one now also considers the emotional experience of use: the texture of the mouse should promote a feeling of control and mastery, the click should feel rewarding, and even the look, feel, and brand must create the emotional tags desired by the end-user.

This movement is already well underway. Some could claim that this new framework has emerged because of the “emotional revolution” that has been underway in psychological research for the past several decades. Or it could be the zeitgeist of the time. Or it could be the emotional catering desired by millennials and later generations: “look and feel” has become the mantra of modern products. Or it could be the fact that product manufacturing has reached such a high art that users now desire mass customization. Or it could be that functional differences between products no longer distinguish a leading product from its nearest competitor. Or it could be the fact that the tools to actually do emotional design have emerged on the scene. Most likely it is a combination of all these factors that has led us to this important revolution. Theorists and practitioners like Don Norman (e.g. Emotional Design: Why We Love (or Hate) Everyday Things, 2004) have been pointing in this direction for more than a decade now. And tools, like the various forms of Design Thinking and Human-Centered Design, have emerged and proved important and fruitful. We must acknowledge that we are now working in the framework of Human Factors 3.0 and that the emotional needs, limitations, and aspirations of end-users must begin to inform design decisions.

As designers and human factors engineers we now need the tools and methods required to help us gather the emotional requirements necessary to inform design decisions. Such tools range from the physical (GSR, EEG, eye-movements, fNIRS, MRI, etc.) to the psychophysical (e.g. FACS). And perhaps another paper could be written detailing the wealth of new analytic techniques that can help make sense of such recorded data (voice analysis, sentiment analysis, big data, machine learning, etc.). But here I focus on two methods for uncovering and working with emotional needs within the Human Factors 3.0 framework.

Emotional Task Analysis

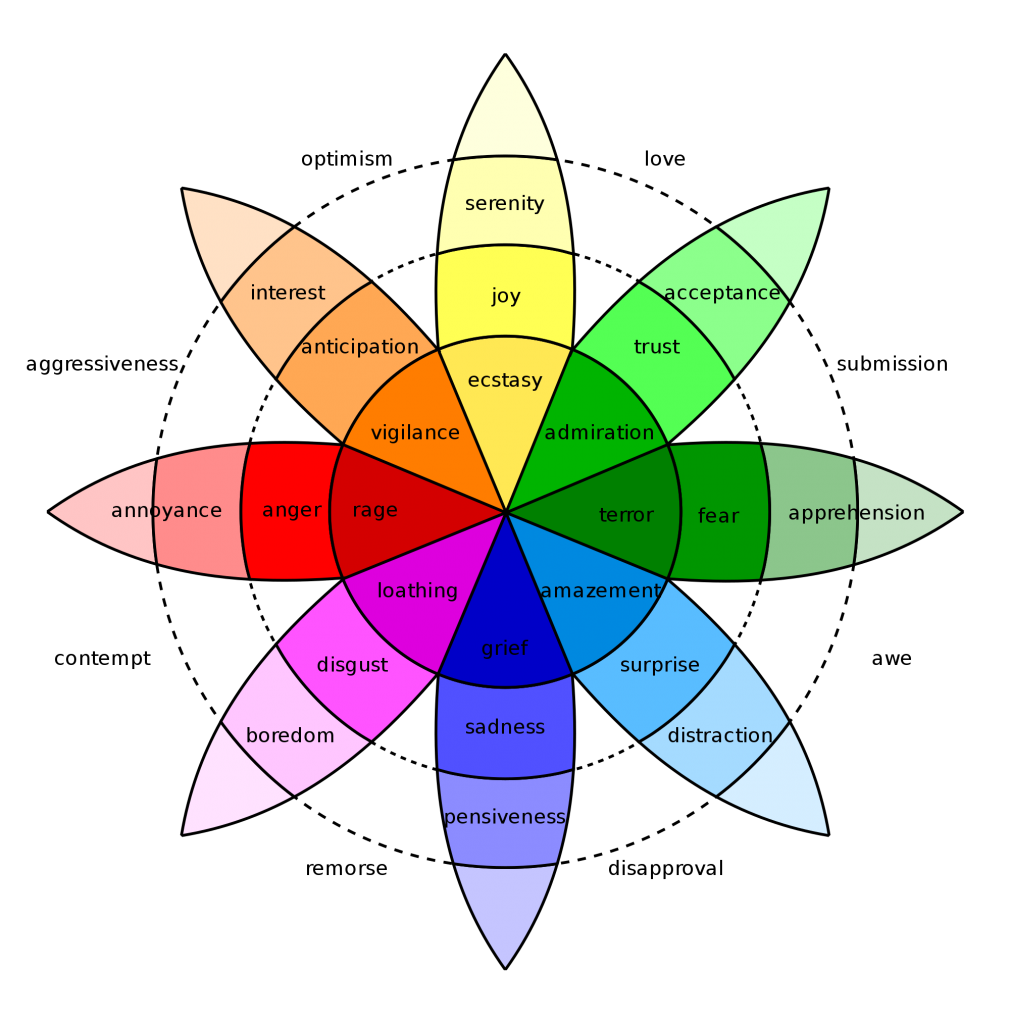

In the Human Factors 1.0 framework, one standard technique or method was behavioral task analysis (BTA). To do a BTA the practitioner would look at a complex task (e.g. performing maintenance on a washing machine) and would analyze all the steps/tasks involved, breaking everything down to a level fine-grained enough to inform the question at hand. A BTA could be used to help create training programs, identify opportunities for improvement, and support a wide range of other design goals. The cognitive revolution spurred the mutation of BTA into cognitive task analysis (CTA). No longer were the practitioners of Human Factors 2.0 satisfied with a behavioral task analysis; now they began to map out the cognitive aspects of task performance. Such cognitive task analysis could uncover a whole new class of cognitive design constraints: decision points, dependencies, categorizations, and information needs. Both BTA and CTA are essential tools in the HFE toolkit. As we move into Human Factors 3.0, I here introduce the method of Emotional Task Analysis (ETA). When designing a system, Human Factors 3.0 practitioners must perform a behavioral task analysis (to understand physical limitations), a cognitive task analysis (to understand cognitive limitations), and an emotional task analysis (to understand emotional limitations/aspirations). It’s not rocket science: it is just another way of thinking about any compound task.

To perform an emotional task analysis you break down the task into steps (as small as required) and then you identify any emotions that might be evoked during (or before/after) each step. For example, for a software app to onboard a new user, there will be several steps where the user might feel uncertain, or confused, or untrusting, or happily surprised. Once these emotions are understood (and explicitly mapped) the designer can then begin to design around them. The artful designer might want to add features (information, icons, sounds, or other mechanisms) to either alleviate these emotions (such as the various trust icons used on websites) or to accentuate them (such as text alerts that congratulate you for creating an account). The world of behavioral economics and nudge-theory has a whole raft of potential design solutions that can then come into play. But, it is important to note, it is the role of the human factors engineer to uncover these requirements or constraints for the designer.
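One way to make such a mapping explicit is to record it as data. The following is a minimal Python sketch, not a prescribed ETA format: the `Step` class, the `design_targets` function, the two valence sets, and the onboarding steps are all hypothetical names and values chosen for illustration, using the emotions mentioned above.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical valence labels; the article does not prescribe a taxonomy.
NEGATIVE = {"uncertain", "confused", "untrusting"}
POSITIVE = {"happily surprised"}


@dataclass
class Step:
    """One step of the task plus the emotions it might evoke."""
    name: str
    emotions: List[str] = field(default_factory=list)


def design_targets(steps):
    """Return (step, emotion, action) triples for the designer.

    Negative emotions are marked to be alleviated and positive ones
    to be accentuated; unlisted emotions are skipped.
    """
    targets = []
    for step in steps:
        for emotion in step.emotions:
            if emotion in NEGATIVE:
                targets.append((step.name, emotion, "alleviate"))
            elif emotion in POSITIVE:
                targets.append((step.name, emotion, "accentuate"))
    return targets


# Illustrative breakdown of onboarding a new user in a software app.
onboarding = [
    Step("open sign-up form", ["uncertain"]),
    Step("enter payment details", ["untrusting", "confused"]),
    Step("account created", ["happily surprised"]),
]

for name, emotion, action in design_targets(onboarding):
    print(f"{name}: {emotion} -> {action}")
```

The result is a plain list of emotional requirements that the human factors engineer could hand to a designer. How each emotion is alleviated or accentuated remains a design decision.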

While this approach is clearly important for designing tools and systems like websites, it plays an even bigger role in the realm of social robotics. For example, imagine we are helping design a “social” robot to perform home healthcare. When the robot attempts to wake the patient for a doctor’s visit, the emotional task analysis tells us that the patient might be confused or upset. So a designer might have the robot say or do something to increase calm and trust. Or, if the robot is working with a dementia patient, the designer might need to acknowledge the near constant confusion and add requirements to design features that moderate every action. Perhaps the robot should play music? Or perhaps the robot should have a face that can be used to immediately convey various emotional states.

In a recent class I taught at Tufts (ENP162: Human-Machine System Design), I asked students to use ETA to design a social robot. One student, Mikayla Rose, used ETA to help inform the design of a shopping-assistant robot (S3: Sally the Shopping Sidekick). A portion of her ETA can be seen in Figure 1.

Notice that the student uncovered various emotional constraints to help inform the robot design. This student (a dual major in Human Factors Engineering and Studio Art) even went one step further and crafted an icon set (Figure 2) that could be used to help achieve the design constraints (faces to evoke the desired emotions) that were uncovered in her ETA. Performing BTA, CTA, and ETA are all essential steps in helping to inform the design of a social robot. As I point out to my HFE students, getting these things right will be essential for effective social robots. The mechanical and electrical engineers clearly have vital contributions to make (to ensure the machine can move, sense, and interact). And computer scientists also have vital contributions to make (to write the software that orchestrates everything). But, the human factors engineer also has vital contributions to make in designing the behavioral, cognitive, and emotional repertoires of the robot.

Emotional Spatial Analysis

ETA can be used to map the emotions (evoked or desired) at various stages in any task. But emotions are also evoked across spaces and times. Another method that can help is emotional spatial analysis (ESA). In this technique the human factors engineer uses various tools to uncover such spatio-temporal emotionality.

An example will help make this clear. In the UK a government agency was concerned that visitors who came to their parks and other outdoor spaces sometimes chose not to return. We decided to approach this as a human factors design opportunity and ask: “What is it about their experiences in the spaces that made them emotionally unsatisfied?” Perhaps it was the long boring walk through the field before arriving at the site? Or maybe it was the craggy path they had to ascend? Or perhaps it was an area that seemed interesting but lacked any signs or information? A PhD student of mine, Rob Laing, created a simple device: it was an ergonomic hand-grip connected to a flat box with a large dial on its top (see image).

Inside the device there was a GPS, a potentiometer, a Bluetooth chip, batteries, and an Arduino brain. The student asked people to walk along set paths in nature and to indicate – via the dial – their moment-to-moment emotionality, similar to the way media channels might use groups of people with dials to indicate their moment-to-moment liking/disliking during a debate. In this case, as the participants traveled through space and time, if they were feeling negative/bored/depressed, they would turn the dial all the way to the left, and if they were feeling positive/excited/elated, they would turn it to the right. He collected the data and made heat-maps from it (see example). These heat-maps could then be used to identify specific spatial positions where emotions were not as desired (for example, more negative in valence). Designers could then create various forms of interventions (signs, guides, landscapes, etc.) to smooth out, or otherwise enhance, the emotional terrain.
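The aggregation step behind such a heat-map can be sketched in a few lines of Python. This is an illustrative assumption, not the student's actual pipeline: it supposes each sample is a `(lat, lon, dial)` tuple with the dial scaled from -1.0 (fully left) to 1.0 (fully right), and the function names, grid size, and sample coordinates are hypothetical.

```python
import math
from collections import defaultdict


def valence_grid(samples, cell_deg=0.001):
    """Average dial readings per grid cell.

    samples: iterable of (lat, lon, dial); dial is assumed to run from
    -1.0 (dial fully left, negative) to 1.0 (fully right, positive).
    cell_deg: cell size in degrees (an arbitrary illustrative choice).
    """
    totals = defaultdict(lambda: [0.0, 0])  # cell -> [sum of dial, count]
    for lat, lon, dial in samples:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        totals[cell][0] += dial
        totals[cell][1] += 1
    return {cell: total / count for cell, (total, count) in totals.items()}


def low_spots(grid, threshold=0.0):
    """Cells whose mean valence falls below the threshold, sorted."""
    return sorted(cell for cell, mean in grid.items() if mean < threshold)


# Made-up readings from walkers along the same path.
samples = [
    (51.5004, -0.1204, -1.0),
    (51.5006, -0.1206, -0.5),
    (51.5024, -0.1204, 0.5),
]

grid = valence_grid(samples)
for cell in low_spots(grid):
    print(cell, grid[cell])
```

Cells returned by `low_spots` mark candidate locations for an intervention such as a sign or a guide. A real analysis would also need to account for GPS noise and for differences in how individual participants use the dial.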

Human Factors X.0

If these iterations of Human Factors Engineering are additive – i.e., Human Factors 3.0 includes all the aspects of 1.0 and 2.0 – where will we go next?

As we move into the future, we begin to see a Human Factors field that strives to include all the other aspects of human experience in the design process. For example, a designer might consider the experiential, environmental, social, technological, or political implications of a design choice. These additional dimensions can add new constraints to the designer’s toolkit. And, each of these different dimensions will have new methods and tools to help uncover relevant constraints. One approach that we have found useful is to do a task-analysis around different dimensions of interest. We call this approach Multidimensional Task Analysis (MTA). MTA can encompass a wide range of task analyses. Some of the task analyses that we have found useful include:

- Informational task analysis (where, when, what info is needed?)

- Decisional task analysis (where, when, what decisions must be made?)

- Attentional task analysis (where, when, how should attention be focused?)

- Social task analysis (what social interactions are happening?)

- Teamwork task analysis (where, how, when, and what cooperative actions?)

Of course, there are many relevant methods beyond task analysis, but this approach demonstrates the broader theme around expanding our constraint spaces. As we move into the future of Human Factors Engineering there is no way to know what new sets of constraints might emerge to help inform design. Currently “emotional constraints” and “emotional design” are areas of needed focus – that is why I have explicitly called them out via the Human Factors 3.0 discussion. Of course, ecological, environmental, and sustainability constraints are also vitally important and are now being used to inform design. As we move into future iterations of Human Factors Engineering we must be ready to identify, consider, examine, measure, and use whatever dimensions are seen as relevant to the design challenges at hand. “Human Factors X.0” is this future, ever-evolving, multidimensional approach to human factors engineering. We must be ready to move transversally through design constraint space: acknowledging and (re)defining dimensions as we navigate the ever-shifting seas of user needs and internal/external constraints.

References

Alexander, C. (1964). Notes on the synthesis of form. Harvard University Press.

Norman, D. A. (2004). Emotional design: Why we love (or hate) everyday things. Basic Books.

Shannon, C. E. (1948). A mathematical theory of communication. Bell System Technical Journal, 27, 379-423.

Tilley, A. R., & Henry Dreyfuss Associates. (2002). The measure of man and woman: Human factors in design (with an introduction by S. B. Wilcox). Wiley.