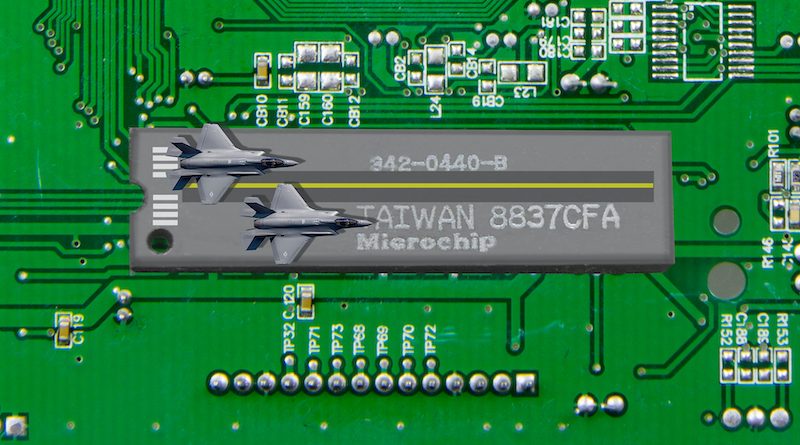

Are we reaching the limits of Moore’s Law?

As costs increase, the future of the computing revolution is at risk

By Chris Miller, Associate Professor of International History at The Fletcher School

When Gordon Moore was asked in 1965 to predict the future, he imagined an “electronic wristwatch,” “home computers” and even “personal portable communications equipment.”

In a world of landlines and mechanical watches, this vision seemed as far-off as the Jetsons’ flying cars. Yet from Mr. Moore’s position running R&D at Fairchild Semiconductor, which at the time was the hottest startup in the new industry of making silicon chips, he perceived that a revolution in miniaturized computing power was under way. Today this revolution is at risk, because the cost of computing isn’t falling at the rate it used to.

The first chip brought to market in the early 1960s featured only four transistors, the tiny electrical switches that flip on (1) and off (0) to produce the strings of digits undergirding all computing. Silicon Valley engineers quickly learned how to put more transistors on each piece of silicon. In the middle of that decade, Mr. Moore noticed that each year, the chips with the lowest cost per transistor had twice as many components as those of the year before. Smaller transistors not only enabled exponential growth in computing power but drove down its cost, too.

The expectation that the number of transistors on each chip would double every year or so came to be known as “Moore’s Law.”

Sixty years later, engineers are still finding new ways to shrink transistors. The most advanced chips have transistors measured in nanometres – billionths of a metre – allowing 15 billion of these tiny electric switches to fit on a single piece of silicon in a new iPhone. Now, though, miniaturizing transistors is more difficult than ever before, with components so small that the random behaviour of individual atomic particles or quantum effects can disrupt their performance.
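A back-of-the-envelope calculation shows what these figures imply. It is a minimal sketch that assumes perfectly steady exponential growth from a four-transistor chip to a 15-billion-transistor chip over exactly sixty years; real progress came in uneven steps, and the function name `implied_doubling_period` is a hypothetical label chosen for illustration.

```python
import math


def implied_doubling_period(start_count, end_count, years):
    """Return (doublings, years per doubling), assuming steady exponential growth.

    Hypothetical helper for illustration: it solves
    end_count = start_count * 2 ** doublings for doublings,
    then spreads those doublings evenly over the given span.
    """
    doublings = math.log2(end_count / start_count)
    return doublings, years / doublings


# Assumed inputs: 4 transistors in the early 1960s, 15 billion in a new
# iPhone chip, with "sixty years later" treated as exactly 60 years.
doublings, period = implied_doubling_period(4, 15e9, 60)
print(f"{doublings:.1f} doublings, one every {period:.2f} years")
# prints "31.8 doublings, one every 1.89 years"
```

About 32 doublings in sixty years works out to one roughly every 1.9 years: closer to a two-year rhythm than a strictly annual one, which is why the law is usually stated as doubling "every year or so" rather than precisely every year.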

Because of these challenges, the cost of manufacturing tiny transistors is skyrocketing, calling into question the future of the computing revolution that Mr. Moore foresaw. For the past decade, the decline in cost per transistor has slowed or even stalled. Chipmaking machinery capable of manipulating materials at the atomic level has become mind-bogglingly complex, involving the flattest mirrors, most powerful lasers and most purified chemicals ever made.

Unsurprisingly, these tools are also eye-wateringly expensive – none more so than the extreme ultraviolet lithography machines needed to produce all advanced chips, which cost US$150-million each. Add up all the costs, and chipmaking facilities now run around $20-billion each, making them among the most expensive factories in history.

When we think of Silicon Valley today, our minds conjure social networks and software companies rather than the material that inspired the valley’s name. Yet the internet, the cloud, social media and the entire digital world rely on engineers who have learned to control the most minute movement of electrons as they race across slabs of silicon. The big tech firms that power the economy wouldn’t exist if the cost of processing and storing 1s and 0s hadn’t fallen a billionfold over the past half century.

A new era of artificial intelligence is dawning not primarily because computer programmers have grown more clever, but because they have exponentially more transistors to run their algorithms through. The future of computing depends fundamentally on our ability to squeeze more computing power from silicon chips.

As costs have ballooned, however, industry luminaries from Nvidia chief executive officer Jensen Huang to former Stanford president and Alphabet chair John Hennessy have declared Moore’s Law dead. Of course, its demise has been predicted before: In 1988, Erich Bloch, an esteemed expert at IBM and later head of the National Science Foundation, declared the law would stop working when transistors shrank to a quarter of a micron – a barrier that the industry bashed through a decade later.

Mr. Moore himself worried in a 2003 presentation that “business as usual will certainly bump up against barriers in the next decade or so,” but all these potential barriers were overcome. At the time, he thought the shift from flat to 3D transistors was a “radical idea,” yet less than two decades later, chip firms have produced trillions of 3D transistors, despite how difficult they are to fabricate.

Moore’s Law may well surprise today’s pessimists, too. Jim Keller, a star semiconductor designer who is widely credited with transformative work on chips at Apple, Tesla, AMD and Intel, has said he sees a clear path toward a 50-fold increase in the density with which transistors can be packed on chips. He points to new transistor shapes and to plans to stack transistors atop one another.

“We’re not running out of atoms,” Mr. Keller has said. “We know how to print single layers of atoms.”

Whether these techniques for shrinking transistors will be economical, however, is a different question. Many people are betting the answer is “no.” Chip firms are still preparing for future generations of semiconductors with smaller transistors. But they’re also working on new ways to deliver more, cheaper computing power without relying solely on their ability to cram more components onto each chip.

One technique is to design chips so that they’re optimized for specific purposes, such as artificial intelligence. Today, the microprocessors inside PCs, smartphones and data centres are designed to provide “general purpose” computing power. They’re just as good at loading a browser as they are at running a spreadsheet. However, some computing tasks are so distinctive and important that companies are building specialized chips around them. AI, for example, depends on performing vast numbers of simple multiplications in parallel, so companies such as Nvidia and an array of new startups are developing chip architectures tailored to that workload. Specialization can provide more computing power without relying solely on smaller transistors.

A second trend is packaging chips in new ways. Traditionally, encasing a chip in a ceramic or plastic package before wiring it into a phone or computer was the simplest and least important step in the chipmaking process. New technologies are changing this, as chipmakers experiment with connecting several chips together in a single package, increasing the speed at which they communicate with each other. New packaging will reduce costs, too, by letting device makers select the optimal combination of chips for the desired level of performance.

Third, a small number of companies are designing chips in house so that their silicon is customized to their needs. Steve Jobs once quipped that software is what you rely on if “you didn’t have time to get it into hardware.” Cloud computing companies such as Amazon and Google are so reliant on the speed, cost and power consumption of the silicon chips in their data centres that they’re now hiring top chip experts to design semiconductors specifically for their clouds. For most firms, designing chips in house is too hard and too expensive, but Apple and Tesla already do so for iPhones and Tesla cars.

Looking at these trends, some analysts worry that a golden age is ending. Researchers Neil Thompson and Svenja Spanuth predict that computing will shortly split along two different development paths: a “fast lane” of expensive, specialized chips and a “slow lane” of general-purpose chips whose rate of progress will likely decline.

It’s undeniable that the microprocessor, the workhorse of modern computing, is being partly displaced by chips for specific purposes, such as running AI algorithms in data centres. What’s less clear is whether this is a problem. Companies such as Nvidia that offer specialized chips optimized for AI have made artificial intelligence far cheaper and therefore more widely accessible. Now, anyone can get into the “fast lane” for a fee by renting computing time on Google’s or Amazon’s AI-optimized clouds.

The important question isn’t whether we’re finally reaching the limits of Moore’s Law as its namesake initially defined it. The laws of physics will eventually impose hard barriers on our ability to shrink transistors, if the laws of economics don’t step in first. What really matters is whether we’re near a peak in the amount of computing power a piece of silicon can cost-effectively produce.

Many thousands of engineers and many billions of dollars are betting not.

This piece is republished from The Globe and Mail.